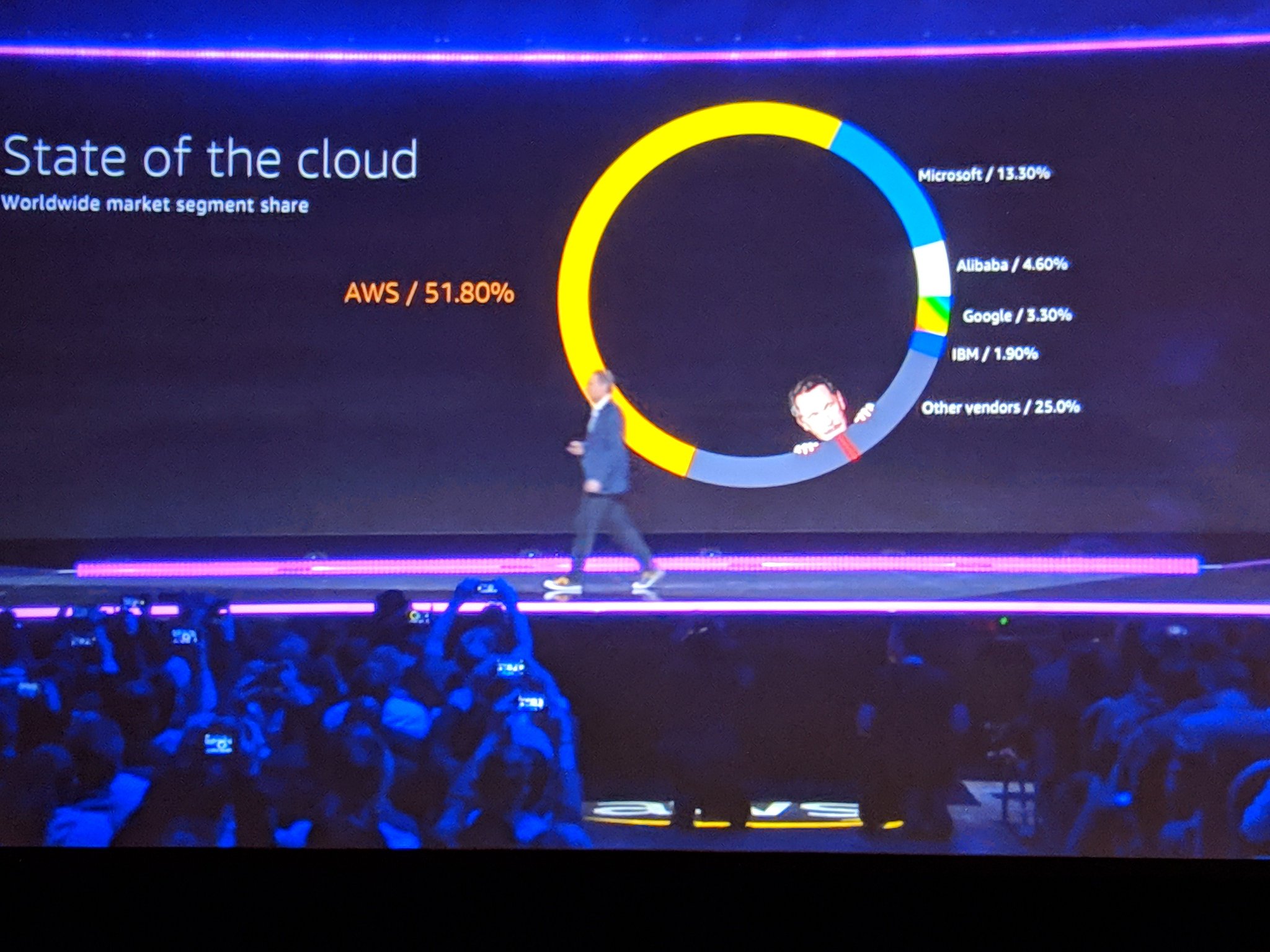

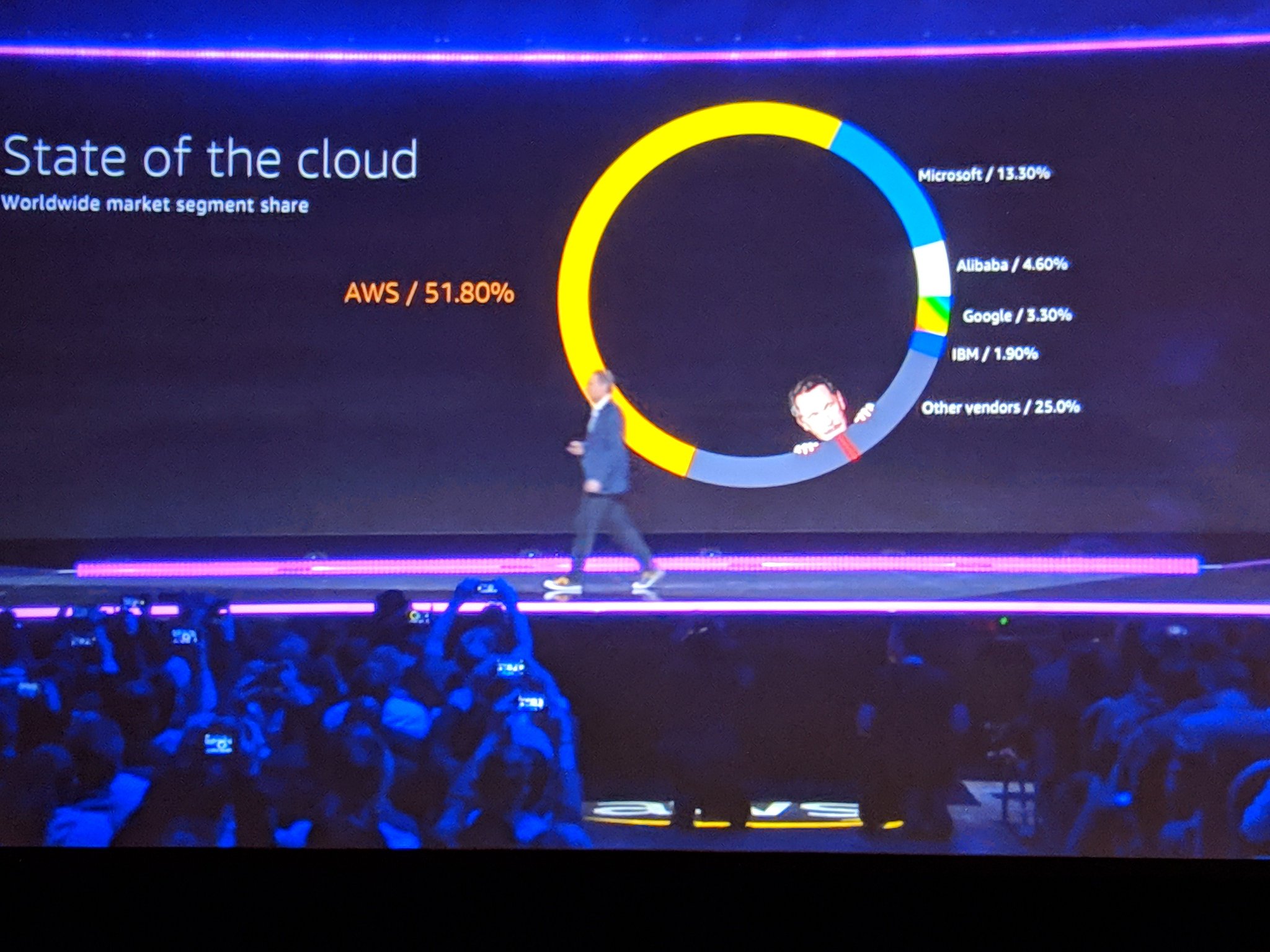

Sorry for the late post this week. I am attending AWS re:Invent (if you are interested, follow me on Twitter @rbocchinfuso, and if you are here, DM me). Yesterday afternoon and last night I was a bit distracted by my first hands-on time with an Amazon DeepRacer.


https://venturebeat.com/2018/11/28/amazon-launches-autonomous-racing-league-and-399-deepracer-car/

I’ve had a love affair with the Donkey Car for a few years, but Amazon’s DeepRacer is the Tesla of 1/18-scale autonomous vehicles. Very cool stuff for geeks like myself.

On Monday and Tuesday I couldn’t stop playing with connecting chatbots I had already developed to Amazon Sumerian hosts. The ability to take a bot and have it leverage Amazon Polly and Sumerian to voice a response through a 3D avatar is just pretty damn cool.

Anyway, today is day four of AWS re:Invent. I’ll be sad when it’s over, but I’ll be back next year for more fun, more learning, more game-changing announcements, and another round of “Where’s Larry,” the bit where Andy Jassy makes fun of Larry Ellison (https://twitter.com/rbocchinfuso/status/1067816905975652352).

Now for my responses to this week’s discussion questions.

When a crisis project occurs, who should be the leader of the crisis team?

The obvious answer being solicited here is the “Project Manager,” and while I agree that the project manager may sometimes be the appropriate person to lead the crisis team, this is not a default standard. Much like a crisis management plan is a tool, not a blueprint, there is no blueprint for who should lead a crisis team. (Coombs, 2007, p. 4) The person who leads the crisis team should have a deep understanding of the project, the business, and the crisis, and should have credibility with both the project team and key stakeholders. Depending on the project, this could be an engineer, a senior manager, a key stakeholder, or even the CEO. Leadership in a crisis requires fortitude, accountability, professionalism, and a positive mental attitude. (Newlands, 2014) James Burke, the CEO of Johnson & Johnson, led J&J through the Tylenol poisoning crisis. Burke was the person best equipped to manage this crisis, which required managing communication with a long list of stakeholders that included stockholders, lending institutions, employees, managers, suppliers, government agencies, and consumers. (Kerzner, 2017, p. 447)

Will there be a crisis committee or a crisis project sponsor?

Coombs (2007) states that a crisis management team might be composed of representatives from public relations, legal, security, operations, finance, and human resources. While I agree that input and engagement from each of these areas are important, I personally don’t believe a crisis can be effectively managed by committee, and the crisis manager has to be careful here. Just about everything you read will say to create, identify, or engage a crisis committee or crisis team, but I find this sounds good in theory; in practice, crises are managed by individuals, and risk is shared via committee. Should the crisis leader build support among stakeholders? Absolutely. Should the crisis leader solicit the opinions and engagement of department heads, management staff, and other key stakeholders? Absolutely. Does support and engagement from team members make the crisis leader any less accountable? Absolutely NOT. All too often committees are used as an excuse for failure. The person leading the crisis team should be capable of soliciting and evaluating feedback, making decisions, and owning the outcome. The wrong choice for a crisis leader is the individual who responds to failure with “I asked everyone’s opinion and we all agreed.” (Kerzner, 2017, p. 447)

How important is effective communication during a crisis?

Communication is one of the most important aspects of crisis management. (Maurer, 2014) Communicating amid the turmoil that accompanies a crisis is not easy, which is where a well-crafted crisis management plan pays off. Such a plan includes pre-drafted communications, a communications timeline, and communication channels, which may include web-based communications and mass communication systems that deliver concise crisis messages via phone, text messaging, voice messages, and e-mail. Media relations and the use of news outlets can also be an effective method of crisis communication. (Coombs, 2007, p. 7) For obvious reasons, the individual dealing with the media needs to be skilled at it: “It is advised to designate a spokesperson, training them in dealing with the media, making sure all employees know who they are and how to direct the media to them.” (Bararia, 2018)

How important is stakeholder relations management during a crisis?

Communication and stakeholder relations management go hand in hand. The individual designated to lead the crisis team is unlikely to be a poor communicator; the crisis leader is going to be someone who has the credibility, and likely the established relationships, to manage stakeholder relations effectively. A good crisis leader is typically an excellent orator, able to communicate skillfully with stakeholders. While much of the communication during a crisis is directed by leadership, crisis situations are stressful, so it is advisable to have a communication management plan that outlines how communications will occur. This includes, but is not limited to, internal communications, client communications, vendor communications, and media communications. (Maurer, 2014) The value of doing this well is clear when we compare how Johnson & Johnson and its CEO, James Burke, managed stakeholder relations during the Tylenol poisoning crisis with how Vladimir Putin handled the Russian submarine Kursk crisis. (Kerzner, 2017, pp. 445-453)

Should a company immediately assume responsibility for a crisis?

YES! All you need to do is look at the Public Opinion View of Crisis Management table in this week’s case study to see that not immediately assuming responsibility is a bad idea. (Kerzner, 2017, p. 454) We can see clear differences between how Johnson & Johnson and its CEO, James Burke, reacted to responsibility for the Tylenol poisonings and how all the others reacted. Burke’s swift action, ownership and accountability, and communication (internal and external) made J&J a victim, rather than a villain, in the court of public opinion. Personally, I think the CEO’s involvement and accountability in the Tylenol crisis made a huge difference. The companies viewed as villains differed in how they communicated, in their level of accountability, and in how swiftly they reacted. It’s clear that the assumption of responsibility by an organization is critical. The Volkswagen clean diesel scandal is a great example of what happens when the crisis manager (in Volkswagen’s case, CEO Matthias Müller) attempts every trick in the book to avoid taking responsibility; to be fair, he would have been admitting he was a criminal. (Atiyeh, 2018)

How important is response time when a crisis occurs?

Very important, as response time and accountability are linked to both perception and reality. When a crisis occurs, engagement has to be swift: well orchestrated, but swift. The longer a crisis marinates without clear and transparent communication, the more likely it is that people will form their own opinions. “It takes years to build a solid corporate reputation, but only hours to dismantle it.” (Holsberg, 2013) The faster the response to a crisis, the more likely it is that escalation can be controlled. “A well thought-out plan can help hotel management respond and control damage to the organization’s reputation, financial condition, market share, and brand value.” (Barton, 1994) To facilitate an expeditious crisis response, an organization should have a crisis management plan. A quick response during a crisis can be difficult for obvious reasons, but a crisis management plan that clearly outlines the response and the necessary actions can help maintain control during a time of turmoil.

References

Aramyan, P. (2016, August 12). 5 Crisis Management steps for PMs to take during hardships. Retrieved November 28, 2018, from https://explore.easyprojects.net/blog/5-steps-of-crisis-management-that-project-managers-should-undertake-during-hardships

Atiyeh, C. (2018, October 9). Everything You Need to Know about the VW Diesel-Emissions Scandal. Retrieved November 28, 2018, from https://www.caranddriver.com/news/everything-you-need-to-know-about-the-vw-diesel-emissions-scandal

Bararia, R. (2018, March 19). Significance of Crisis Communications – Internal and External. Retrieved November 28, 2018, from http://reputationtoday.in/little-joys/significance-of-crisis-communications-internal-and-external/

Barton, L. (1994). Crisis management: Preparing for and managing disasters. Cornell Hotel and Restaurant Administration Quarterly, 35(2), 59-65. doi:10.1016/0010-8804(94)90020-5

Coombs, W. T. (2007). Crisis management and communications. Retrieved November 28, 2018, from http://195.130.87.21:8080/dspace/bitstream/123456789/96/1/Crisis%20management%20and%20communications%20Coombs.pdf

Holsberg, M. (2013, July 1). Your Solution for SMART Response Plans. Retrieved November 28, 2018, from https://www.emergency-response-planning.com/blog/bid/59934/The-Critical-Response-Time-of-Crisis-Management-Planning

Kerzner, H. (2017). Project Management Case Studies (5th ed.). Hoboken, NJ: John Wiley & Sons, Incorporated.

Mallak, L. A., Kurstedt, H. A., & Patzak, G. A. (1997). Planning for crises in project management. Project Management Journal, 28(2), 14–20.

Maurer, R. (2014, October 7). Communicate Effectively in a Crisis. Retrieved November 28, 2018, from https://www.shrm.org/resourcesandtools/hr-topics/risk-management/pages/communicate-effectively-crisis.aspx

Newlands, M. (2014, August 23). 5 Things Successful Leaders Do in a Crisis. Retrieved November 28, 2018, from https://www.inc.com/murray-newlands/5-things-successful-leaders-do-in-a-crisis.html