FIT – MGT5157 – Week 5

The submissions for this assignment are posts in the assignment’s discussion. Below are the discussion posts for Richard Bocchinfuso.

Discussion: What is the market for DNS control? Who are the big players in managing domain names? Can domain names be exploited?

The market for DNS control is competitive. There is more to DNS control than owning DNS resolution. Companies like GoDaddy are domain registrars, but they also provide services that leverage those domains, such as web hosting and email. Organizations like GoDaddy started as registrars and grew into internet service providers. The reverse is true of organizations like AWS, which started as a service provider, saw an opportunity to become a domain registrar, and launched a service called Route 53 (a cool name, because 53 is the port that DNS runs on).

Domain names are controlled by ICANN (Internet Corporation for Assigned Names and Numbers). ICANN is a non-profit organization that acts as the governing body tracking domain names maintained by domain name registrars like GoDaddy and NameCheap. The ICANN master domain name database can be queried using “whois”.

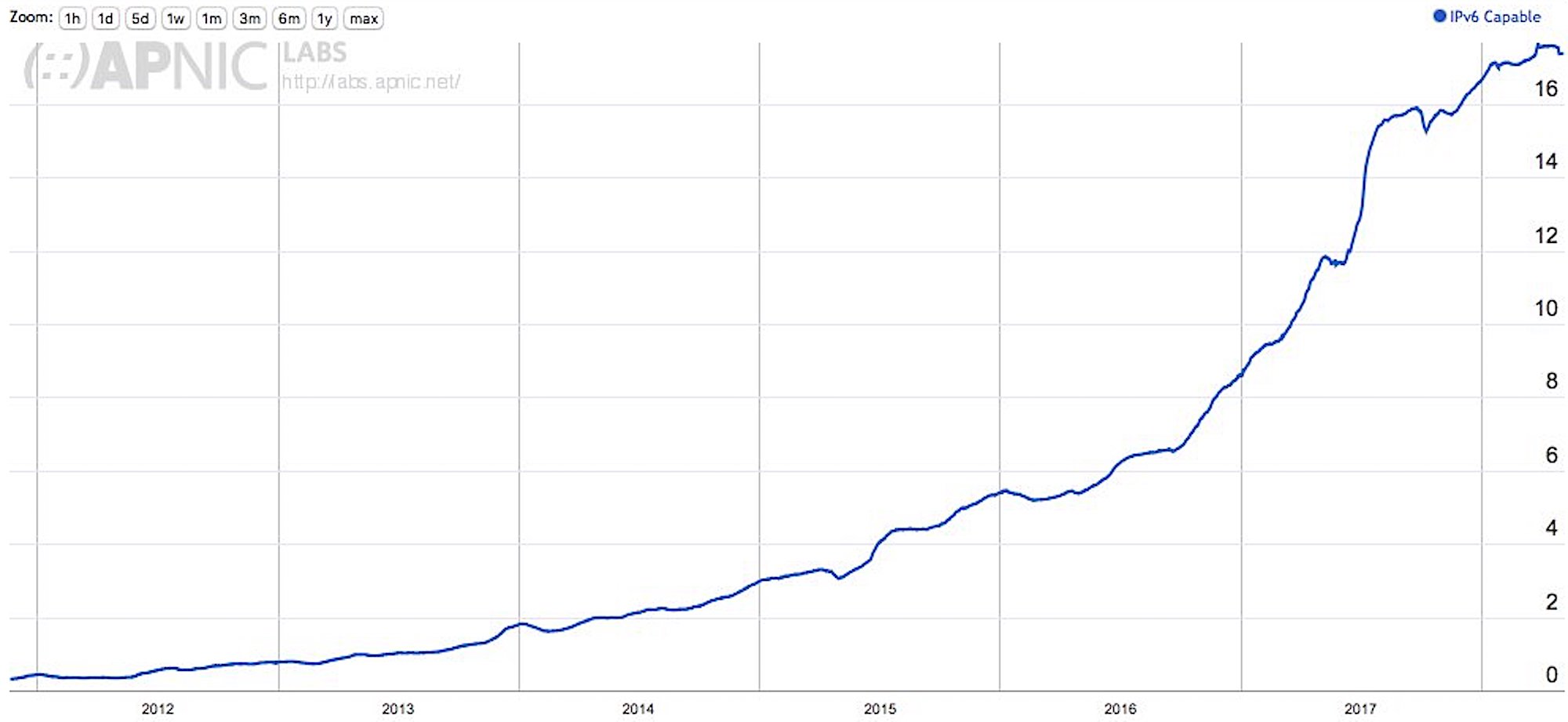

Authoritative DNS root servers are controlled by only a few key players. Their hostnames actually point to an elaborate network of DNS servers around the world.

Source: IANA. (2018, August 3). Root Servers. Retrieved August 3, 2018, from https://www.iana.org/domains/root/servers

It’s not hard to understand why VeriSign is at the top of the list when you understand the relationship between ICANN and VeriSign. Looking down the list, there is, not surprisingly, a correlation between the operators of the authoritative DNS root servers and ownership of Class A address blocks. With the DoD owning 12 Class A blocks, you would expect it to have an authoritative root DNS server.

Source: Pingdom. (2008, February 13). Where did all the IP numbers go? The US Department of Defense has them. Retrieved August 3, 2018, from https://royal.pingdom.com/2008/02/13/where-did-all-the-ip-numbers-go-the-us-department-of-defense-has-them/

Querying the ICANN database for a specific domain name returns relevant information about the domain name as well as the registrar.

Above we can see that “whois bocchinfuso.net” reveals the registrar as NameCheap, the NameCheap IANA ID, etc.

Each domain registrar is assigned a registrar IANA (Internet Assigned Numbers Authority) ID by ICANN.
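The lookup described above can be sketched in a few lines of Python. This is a minimal sketch, not the way the `whois` command itself is implemented: it assumes the plain-text WHOIS protocol (RFC 3912) on TCP port 43 and assumes `whois.verisign-grs.com` as the server for `.net` names. The function names `whois_query` and `parse_whois` are hypothetical helpers introduced only for illustration.

```python
import socket


def whois_query(domain, server="whois.verisign-grs.com", timeout=10.0):
    """Send one WHOIS query and return the raw text response.

    Assumption: the server speaks RFC 3912 WHOIS on TCP port 43, which
    means we send the name followed by CRLF and read until the server
    closes the connection.
    """
    with socket.create_connection((server, 43), timeout=timeout) as sock:
        sock.sendall(domain.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_whois(text):
    """Collect 'Key: value' lines, keeping the first value seen per key."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and value.strip():
            fields.setdefault(key.strip(), value.strip())
    return fields
```

Calling `parse_whois(whois_query("bocchinfuso.net"))` and reading the `"Registrar"` and `"Registrar IANA ID"` keys should surface the same fields shown in the screenshot, assuming the server still labels them that way.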

DomainState tracks statistics about domain registrars, so we can easily see who the major registrars are.

Source: DomainState. (2018, August 3). Registrar Stats: Top Registrars, TLD Marketshare, Top Registrars by Country. Retrieved August 3, 2018, from https://www.domainstate.com/registrar-stats.html

GoDaddy is roughly 6x larger than the number two registrar. GoDaddy has grown to nearly 60 million registered domains, both organically and through acquisition.

Yes, DNS can be exploited. DNS allows attackers to more easily identify their attack vector. DNS servers are able to perform both forward lookups (mapping a DNS name to an IP address) and reverse lookups (mapping an IP address to a DNS name). This allows attackers to open the internet phone book, easily acquire a target, and commence an advanced persistent threat (APT).
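To make the forward and reverse lookups concrete, here is a minimal Python sketch that uses the operating system's resolver through the standard library. The function names are hypothetical and chosen for illustration. `ptr_name` shows the special `in-addr.arpa` (or `ip6.arpa`) name that a reverse lookup actually queries.

```python
import ipaddress
import socket


def forward_lookup(name):
    """Map a DNS name to its IP addresses using the system resolver."""
    infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the address string.
    return sorted({info[4][0] for info in infos})


def reverse_lookup(ip):
    """Map an IP address back to a name via its PTR record, if any.

    Raises socket.herror when no reverse record exists.
    """
    hostname, _aliases, _addresses = socket.gethostbyaddr(ip)
    return hostname


def ptr_name(ip):
    """Return the DNS name that is queried for a reverse lookup of ip."""
    return ipaddress.ip_address(ip).reverse_pointer
```

For example, `ptr_name("192.0.2.1")` gives `1.2.0.192.in-addr.arpa`. The results of `forward_lookup` and `reverse_lookup` depend on the network and resolver in use, so no output is shown for them.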

Domain names are often linked with branding, so once an APT commences against a domain, the owner can’t simply move to a different name. DNS can also play a role in protecting against threats. Services like Quad9 and OpenDNS provide security-aware DNS resolvers that block access to malicious domains.

Because DNS names are how we refer to internet properties, typosquatting is a popular DNS threat. Typosquatting is a practice where someone registers a DNS name that is similar to a popular domain name, capturing everyone who mistypes the popular name.
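A brand owner can get a rough feel for their typosquatting exposure by generating the obvious misspellings of their own name. The sketch below is a simplified illustration covering only three kinds of typo (a dropped letter, a doubled letter, and two swapped neighbors). The function name `typo_variants` is hypothetical, and real tooling covers many more cases, such as adjacent keys and lookalike characters.

```python
def typo_variants(domain):
    """Return simple misspellings of the first label of a domain name."""
    label, dot, rest = domain.partition(".")
    variants = set()
    for i in range(len(label)):
        # Omission: drop the character at position i.
        variants.add(label[:i] + label[i + 1:])
        # Repetition: type the character at position i twice.
        variants.add(label[:i] + label[i] + label[i:])
        # Transposition: swap the character with its right-hand neighbor.
        if i + 1 < len(label):
            variants.add(label[:i] + label[i + 1] + label[i] + label[i + 2:])
    # Swapping two identical letters reproduces the original; drop it.
    variants.discard(label)
    variants.discard("")
    return {variant + dot + rest for variant in variants}
```

For `netflix.com` the result includes `netfix.com`, `nettflix.com`, and `netlfix.com`. Each candidate could then be checked against whois to see whether someone else has already registered it.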

DNS servers are ideal DDoS attack targets because the inability to resolve DNS names has an impact across the entire network.

Registrar or domain hijacking is when an attacker gains access to your domain by exploiting the registrar. Once the attacker has access to the domain records, they can do anything from pointing the A record at a new location to transferring the domain to a new owner. There are safeguards that can be put in place to protect against unauthorized transfers, but someone gaining access to your registrar account is not a good situation.

DNS is a massive directory, and to decrease latency DNS caches are placed strategically around the Internet. These caches can be compromised by an attacker, and resolved names may then take an unsuspecting user to a malicious website. This is called DNS spoofing or cache poisoning.
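The reason a poisoned cache is so damaging is that the bad answer keeps being served until its time-to-live (TTL) runs out. The toy model below illustrates only that one property. It is not how a real resolver is built: the class `ToyDnsCache` is hypothetical, it accepts any answer it is handed, and the addresses come from ranges reserved for documentation.

```python
class ToyDnsCache:
    """A deliberately naive name-to-address cache with TTL expiry."""

    def __init__(self):
        self._entries = {}  # name -> (address, expires_at)

    def store(self, name, address, ttl, now):
        """Cache an answer for ttl seconds, with no validation at all."""
        self._entries[name.lower()] = (address, now + ttl)

    def lookup(self, name, now):
        """Return the cached address, or None if it is absent or expired."""
        key = name.lower()
        entry = self._entries.get(key)
        if entry is None:
            return None
        address, expires_at = entry
        if now >= expires_at:
            del self._entries[key]
            return None
        return address


cache = ToyDnsCache()
# A legitimate answer is cached first.
cache.store("example.com", "192.0.2.10", ttl=300, now=0)
# A forged answer with a long TTL overwrites it.
cache.store("example.com", "203.0.113.66", ttl=3600, now=10)
# Every client asking this cache is now sent to the forged address
# until the forged entry expires an hour later.
poisoned_answer = cache.lookup("example.com", now=20)
```

Here `poisoned_answer` is the forged address `203.0.113.66`, and it stays that way for any lookup before `now=3610`.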

These are just a few DNS attack vectors; there are plenty of others. The convenience of DNS is also what creates the risk. DNS makes it easy for us to find our favorite web properties like netflix.com, but it also makes it easy for an attacker to find netflix.com.

References

DomainState. (2018, August 3). Registrar Stats: Top Registrars, TLD Marketshare, Top Registrars by Country. Retrieved August 3, 2018, from https://www.domainstate.com/registrar-stats.html

IANA. (2018, August 3). Root Servers. Retrieved August 3, 2018, from https://www.iana.org/domains/root/servers

ICANN. (2018, August 3). ICANN64 Fellowship Application Round Now Open. Retrieved August 3, 2018, from https://www.icann.org/

Mohan, R. (2011, October 5). Five DNS Threats You Should Protect Against. Retrieved August 3, 2018, from https://www.securityweek.com/five-dns-threats-you-should-protect-against

Pingdom. (2008, February 13). Where did all the IP numbers go? The US Department of Defense has them. Retrieved August 3, 2018, from https://royal.pingdom.com/2008/02/13/where-did-all-the-ip-numbers-go-the-us-department-of-defense-has-them/

Carmeshia, I enjoyed your post. You bring up an interesting point regarding centralization, control, and exploitation. What do you think is more secure, a centralized or decentralized DNS registrar system?

With the increase in APTs (advanced persistent threats), I tend to favor decentralization, but everyone has a perspective, and I’m interested in hearing yours.

Nawar, good post, I enjoyed reading it. While DNS is not a security-centric protocol, few protocols are. The network’s reliance on DNS is both a good and a bad thing. Because DNS name resolution is such a critical network function, it is a target of attacks like DDoS, since the blast radius of an attack on DNS is significant. That said, the essential nature of DNS also has many focused on protecting it and mitigating risk. Services like Cloudflare, Akamai, Imperva Incapsula, Project Shield, and others have built robust anti-DDoS systems to identify and shed DDoS traffic.

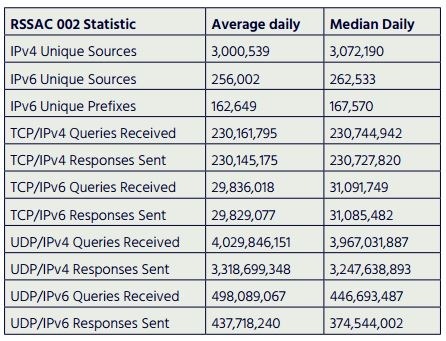

Sharing some pretty interesting data when comparing the top DNS providers.

When you start to segment domains by Alexa rank, GoDaddy gets outranked by Cloudflare, Amazon Route 53, Akamai, and Google DNS pretty consistently.

Some good detail on why in this article: https://stratusly.com/best-dns-hosting-cloudflare-dns-vs-dyn-vs-route-53-vs-dns-made-easy-vs-google-cloud-dns/

The moral of the story here is that while GoDaddy appears to be the Goliath, and is in terms of domain name registration volume, the FANG (Facebook, Amazon, Netflix, Google) type companies own the internet traffic, and the volume DNS registration game is becoming a commodity. GoDaddy has the first-mover advantage, but competitors like namecheap.net and name.com are coming after them. With Netflix accounting for nearly 40% of all internet traffic, the FANG companies matter, and I don’t think the Cloudflares, Akamais, and Amazon Route 53s of the world want to chase the GoDaddy subscriber base.