Discussion: Describe the differences between IPv6 and IPv4. What implications does it have on networks? On the user? What could be done to speed up the transition process?

First, let’s talk about a major catalyst for the development and adoption of IPv6: the idea that the Internet would exhaust the available IPv4 address space. One prediction, made back in 2011, stated that the Internet would exhaust all available IP addresses by 4 AM on February 2, 2011 (Kessler, 2011). Here we are 2725 days later and the “IPcalypse” or “ARPAgeddon” has yet to happen; in fact, @IPv4Countdown is still foreshadowing IPv4 doomsday scenarios via Twitter. So what is the deal? Well, it’s true that the available IPv4 address space is limited, with a pool of slightly less than 4.3 billion addresses (2^32, more on this later). It is important to remember that many of these predictions predate Al Gore taking credit for creating the internet. Sorry Bob Kahn and Vint Cerf, it was Al Gore who made this happen.

Back in the 1990s we didn’t have visibility to technologies like CIDR (Classless Inter-Domain Routing) and NAT (Network Address Translation). In addition, many of us today use techniques like reverse proxying and proxy ARP. Simplistically, this allows something like NGINX to act as a proxy (middleman), so that many services can sit on a single port behind a single public IP address, with traffic routed and proxied to the appropriate backend.

For example, a snippet of an NGINX reverse proxy config might look something like this:

    server {
        listen 80;
        server_name site.foo.com;

        location / {
            access_log on;
            client_max_body_size 500M;
            proxy_pass http://INTERNAL_HOSTNAME_OR_IP;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

    server {
        listen 80;
        server_name site.bar.com;

        location / {
            access_log on;
            client_max_body_size 500M;
            proxy_pass http://INTERNAL_HOSTNAME_OR_IP;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

Let’s assume that there are two DNS A records, one for site.foo.com and one for site.bar.com, that both point to the same IP address, and that each site is served by its own web server running on port 80. How does a request for site.foo.com know to go to web server A and a request for site.bar.com know to go to web server B? The answer is a reverse proxy, which matches the requested server name and proxies the request to the right backend; this is what we see above.
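To make the routing decision concrete, here is a minimal Python sketch of the idea behind the `server_name` matching above. It is not how NGINX is implemented; the `UPSTREAMS` table, the backend addresses and the `pick_upstream` helper are all hypothetical names made up for illustration. It also simplifies one thing: a real NGINX falls back to a default server when no name matches, whereas this sketch just returns `None`.

```python
from typing import Optional

# Hypothetical routing table: the host names match the config above,
# the backend addresses are invented for this example.
UPSTREAMS = {
    "site.foo.com": "http://10.0.0.11:80",
    "site.bar.com": "http://10.0.0.12:80",
}


def pick_upstream(host_header: str) -> Optional[str]:
    """Return the backend for a Host header, or None if nothing matches."""
    # Drop an optional ":port" suffix and lowercase the name,
    # because host names are case-insensitive.
    host = host_header.split(":", 1)[0].strip().lower()
    return UPSTREAMS.get(host)


if __name__ == "__main__":
    for header in ("site.foo.com", "SITE.BAR.COM:80", "unknown.example"):
        print(header, "->", pick_upstream(header))
```

Both names resolve to the same public IP address, so the only thing that tells the two requests apart is the Host header the client sends.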

I use this configuration for two sites that I host: bocchinfuso.net and gotitsolutions.org.

A dig (domain information groper) of both of these domains reveals that their A records point to the same IP address. The NGINX reverse proxy does the work of routing to the proper server or service based on the requested server name, and proxies the traffic back to the client. nslookup would work as well if you would like to try it, but dig gives a slightly cleaner display for posting below.

    $ dig bocchinfuso.net A +short
    173.63.111.136
    $ dig gotitsolutions.org A +short
    173.63.111.136

NGINX is a popular web server, which can also be used for reverse proxying, as I am using it above, as well as for load balancing.

IPv6 (Internet Protocol version 6) is the next generation of, or successor to, IPv4 (Internet Protocol version 4). An IPv4 address is made up of four octets of 8 bits each, for a 32-bit address. It is written as four numbers between 0 and 255, separated by dots.

Source: ZeusDB. (2015, July 30). Understanding IP Addresses – IPv4 vs IPv6.
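As a small illustration of the four-octet layout, the sketch below packs a dotted-quad address into a single 32-bit integer by hand and cross-checks the result against Python's standard `ipaddress` module. The helper name `ipv4_to_int` is made up for this example, and the sample address is the one from the dig output earlier in this post.

```python
import ipaddress


def ipv4_to_int(dotted: str) -> int:
    """Pack four 8-bit octets into one 32-bit integer."""
    octets = [int(part) for part in dotted.split(".")]
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"not a dotted-quad IPv4 address: {dotted!r}")
    value = 0
    for octet in octets:
        # Shift what we have left by 8 bits and append the next octet.
        value = (value << 8) | octet
    return value


if __name__ == "__main__":
    addr = "173.63.111.136"
    packed = ipv4_to_int(addr)
    # The standard library should agree with the hand-rolled packing.
    assert packed == int(ipaddress.IPv4Address(addr))
    print(f"{addr} as 32 bits: {packed:032b}")
    print(f"total IPv4 addresses: {2 ** 32:,}")  # 4,294,967,296
```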

IPv6 addresses consist of eight 16-bit segments that make up a 128-bit address, giving IPv6 a total address space of 2^128 (~340.3 undecillion), which is a pretty big address space. To put 2^128 into perspective, it is enough IP address space for every person on the planet to personally have 2^95, or about 39.6 octillion, IP addresses. That’s a lot of IP address space.

Source: ZeusDB. (2015, July 30). Understanding IP Addresses – IPv4 vs IPv6.

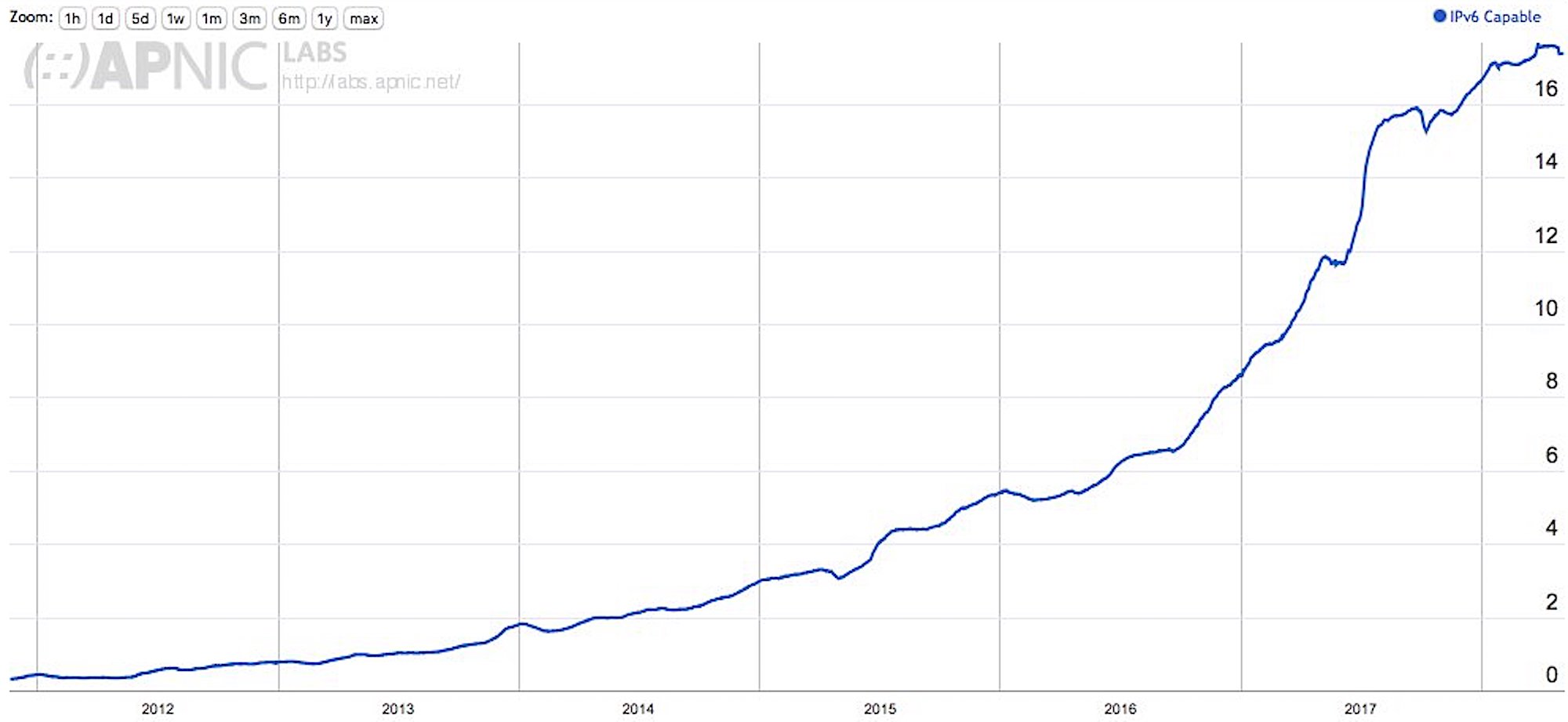
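The arithmetic behind those figures can be checked in a few lines of Python. This is only a sanity check of the numbers quoted above; the variable names are arbitrary, and the sample IPv6 address is simply a convenient real-world one (it is Google's AAAA answer shown later in this post).

```python
import ipaddress

IPV4_BITS = 32
IPV6_BITS = 128

ipv4_total = 2 ** IPV4_BITS
ipv6_total = 2 ** IPV6_BITS

# If each person gets 2^95 addresses, how many people does 2^128 cover?
per_person = 2 ** 95
people = ipv6_total // per_person  # 2^33

# An IPv6 address written out in full is eight groups of four hex digits.
sample = ipaddress.IPv6Address("2607:f8b0:4004:80e::200e")
groups = sample.exploded.split(":")

if __name__ == "__main__":
    print(f"IPv4 address space: {ipv4_total:,}")
    print(f"IPv6 address space: {ipv6_total:.4e}")
    print(f"addresses per person (2^95): {per_person:.3e}")
    print(f"people covered: {people:,}")
    print(f"{sample} -> {len(groups)} groups of 16 bits: {groups}")
```

Note that 2^128 divided by 2^95 is 2^33, or roughly 8.6 billion, which is where the "every person on the planet" comparison comes from.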

One of the challenges with IPv6 is that it is not easily interchangeable with IPv4. This has slowed adoption, and with the use of proxying, tunneling and similar technologies I believe the sense of urgency is not what it once was. IPv6 adoption has been slow, but with the rapid adoption of IoT and the number of devices being brought online we could begin to see a significant increase in the IPv6 adoption rate. In 2002, Cisco forecasted that IPv6 would be fully adopted by 2007.

Source: Pingdom. (2009, March 06). A crisis in the making: Only 4% of the Internet supports IPv6.

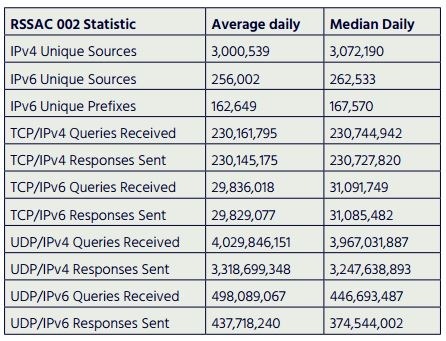

The Internet Society State of IPv6 Deployment 2017 paper states that ~9 million domains and 23% of networks are advertising IPv6 connectivity. When we look at the adoption of IPv6, I think this table does a nice job of outlining where IPv4 and IPv6 sit relative to each other.

Source: Internet Society. (2017, May 25). State of IPv6 Deployment 2017.

The move to IPv6 will be nearly invisible from a user perspective; our carriers and devices (cable modems, cellular devices, etc.) abstract us from the underpinnings of how things work. Our request to google.com will magically resolve to an IPv6 address instead of an IPv4 address, and it won’t matter to the user.

For example, here is a dig of google.com that returns its IPv4 and IPv6 addresses.

    $ dig google.com A google.com AAAA +short
    172.217.3.46
    2607:f8b0:4004:80e::200e
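For readers who want to tell the two answers apart programmatically, here is a short sketch using Python's standard `ipaddress` module. It works offline on the two addresses from the dig output above rather than performing a DNS lookup, and the `address_family` helper is a name invented for this example.

```python
import ipaddress


def address_family(text: str) -> str:
    """Label an address by the DNS record type that would carry it."""
    ip = ipaddress.ip_address(text)  # raises ValueError on bad input
    return "A (IPv4)" if ip.version == 4 else "AAAA (IPv6)"


if __name__ == "__main__":
    # The two answers from the dig output above.
    for answer in ("172.217.3.46", "2607:f8b0:4004:80e::200e"):
        print(answer, "->", address_family(answer))
```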

Note: If you’re a Linux user you know how to use dig, and macOS should have dig. If you’re on Windows and don’t already know how to get access to dig, the easiest path can be found here: https://www.danesparza.net/2011/05/using-the-dig-dns-tool-on-windows-7/

The adoption rate of IPv6 could be increased by simplifying interoperability between IPv4 and IPv6. The exhaustion of the IPv4 address space and the exponential increase in connected devices are upon us, and this may be the catalyst the industry needs to simplify interoperability and speed adoption.

With the above said, interestingly IPv6 adoption is slowing.

McCarthy, K. (2018, May 22). IPv6 growth is slowing and no one knows why. Let’s see if El Reg can address what’s going on.

I think it’s a chicken-or-the-egg situation. There have been IPv4 address space concerns for years, but the heavy lift required to adopt IPv6 led to slow and low adoption rates, which pushed innovation in a different direction. With the use of a reverse proxy, maybe I don’t need any more public address space. Only time will tell, but this is foundational infrastructure akin to the interstate highway system; change will be a long journey, and it’s possible we will start to build new infrastructure before we ever reach the destination.

References

Hogg, S. (2015, September 22). ARIN Finally Runs Out of IPv4 Addresses. Retrieved July 20, 2018, from https://www.networkworld.com/article/2985340/ipv6/arin-finally-runs-out-of-ipv4-addresses.html

Internet Society. (2017, May 25). State of IPv6 Deployment 2017. Retrieved July 20, 2018, from https://www.internetsociety.org/resources/doc/2017/state-of-ipv6-deployment-2017/

Kessler, S. (2011, January 22). The Internet Is Running Out of Space…Kind Of. Retrieved July 20, 2018, from https://mashable.com/2011/01/22/the-internet-is-running-out-of-space-kind-of/#49ZaFObrqPqW

McCarthy, K. (2018, May 22). IPv6 growth is slowing and no one knows why. Let’s see if El Reg can address what’s going on. Retrieved July 20, 2018, from https://www.theregister.co.uk/2018/05/21/ipv6_growth_is_slowing_and_no_one_knows_why/

NGINX. (2018, July 20). High Performance Load Balancer, Web Server, & Reverse Proxy. Retrieved July 20, 2018, from https://www.nginx.com/

Pingdom. (2009, March 06). A crisis in the making: Only 4% of the Internet supports IPv6. Retrieved July 20, 2018, from https://royal.pingdom.com/2009/03/06/a-crisis-in-the-making-only-4-of-the-internet-supports-ipv6/

Pingdom. (2017, August 22). Tongue twister: The number of possible IPv6 addresses read out loud. Retrieved July 20, 2018, from https://royal.pingdom.com/2009/05/26/the-number-of-possible-ipv6-addresses-read-out-loud/

Wigmore, I. (2009, January 14). IPv6 addresses – how many is that in numbers? Retrieved July 20, 2018, from https://itknowledgeexchange.techtarget.com/whatis/ipv6-addresses-how-many-is-that-in-numbers/

ZeusDB. (2015, July 30). Understanding IP Addresses – IPv4 vs IPv6. Retrieved July 20, 2018, from https://www.zeusdb.com/blog/understanding-ip-addresses-ipv4-vs-ipv6/